I recently spoke at a conference and was asked for the easiest way to import databases into Azure SQL Database, so I wanted to share how I do it with dbatools (dbatools.io). You can also run just the export half of the same code if you need a local copy of an Azure SQL database.

Import-Module dbatools -Force

<# Variables #>

$BackupPath = "C:\Demo\AzureSQL\Bacpac" #folder location for backups

$SourceInstance = "sql2019\sql2016"

$DBName = "AdventureWorksLT2012"

$AzureDestInstance = "procuresqlsc.database.windows.net"

$DBNameDest = $DBName

<# bacpac options for import and export #>

$option = New-DbaDacOption -Type Bacpac -Action Export

$option.CommandTimeout = 0

$option2 = New-DbaDacOption -Type Bacpac -Action Publish

$option2.CommandTimeout = 0

<#

The following assumes the Azure SQL Database either exists and is empty

or does not exist yet, in which case it is created by default

#>

$bacpac = Export-DbaDacPackage -Type Bacpac -DacOption $option -Path $BackupPath -SqlInstance $SourceInstance -Database $DBName

Publish-DbaDacPackage -Type Bacpac -SqlInstance $AzureDestInstance -Database $DBNameDest -Path $bacpac.Path -DacOption $option2 -SqlCredential username

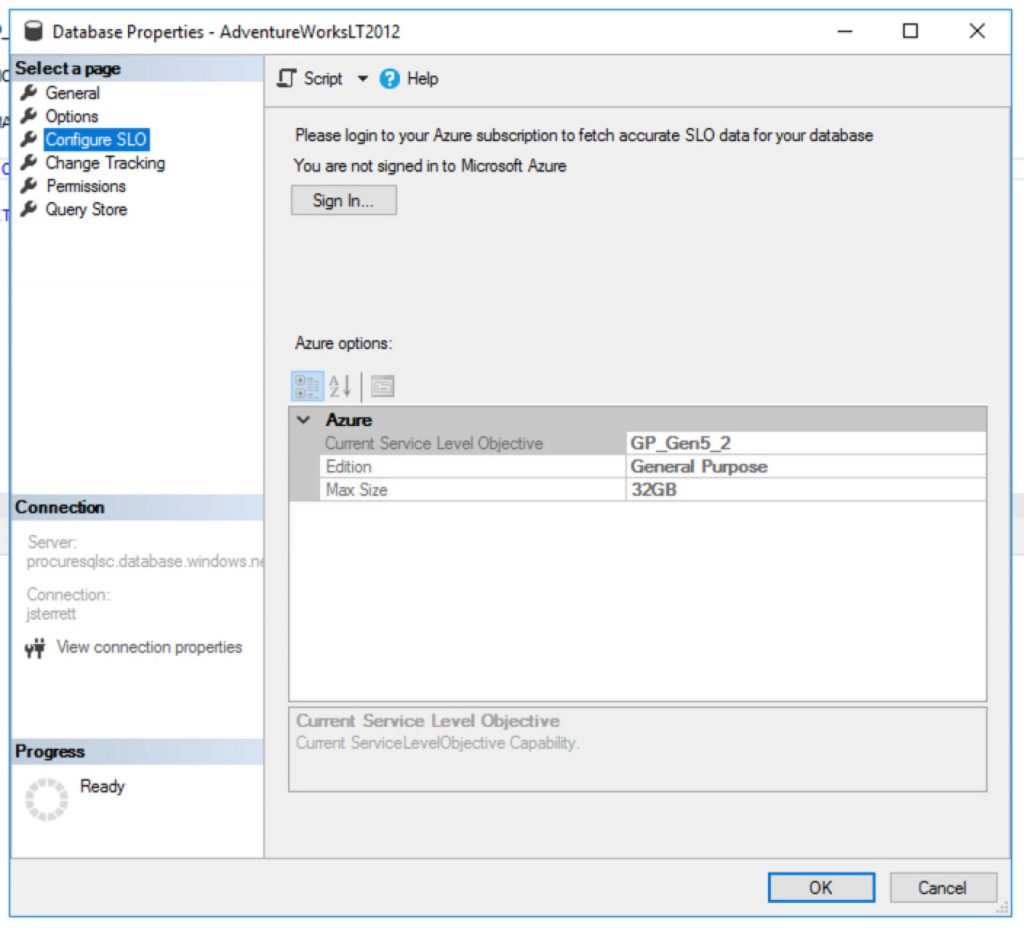

What Is My Performance Tier?

Great question! As of 3/3/2020, if the target database does not exist in Azure SQL Database, it is created for you. A database created this way uses the default performance tier, which is General Purpose (Gen5) with 2 vCores.
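To confirm which tier an imported database actually landed on, you can query it with the Az module. The following is a minimal sketch, assuming you have already signed in with Connect-AzAccount and that the Az.Sql cmdlets are installed. The function name `Get-ImportedDatabaseTier` is my own illustrative helper, not part of any module.

```powershell
# Hypothetical helper: the function name is illustrative and not part of the Az module.
# Assumes you are already signed in with Connect-AzAccount.
function Get-ImportedDatabaseTier {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string] $ResourceGroupName,
        [Parameter(Mandatory)] [string] $ServerName,   # short server name, not the FQDN
        [Parameter(Mandatory)] [string] $DatabaseName
    )

    # Return only the properties that describe the performance tier
    Get-AzSqlDatabase -ResourceGroupName $ResourceGroupName -ServerName $ServerName -DatabaseName $DatabaseName |
        Select-Object DatabaseName, Edition, CurrentServiceObjectiveName, SkuName, Capacity
}
```

For example, `Get-ImportedDatabaseTier -ResourceGroupName "<Your Existing Resource Group>" -ServerName "<Your Azure SQL Database Server>" -DatabaseName "AdventureWorksLT2012"` should list the edition and service objective for the database imported above.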

How to Create Cheaper Databases

You can import databases to Azure SQL Database more cheaply by creating the target database yourself, at the tier you want, before you import. It is as simple as using the Azure PowerShell module. In the following example, I use my existing Azure SQL Database server and create a new database at the “S0” tier.

<#

Installing the Azure PowerShell module for all users requires local admin

Install-Module -Name Az -AllowClobber -Scope AllUsers

#>

Import-Module Az -Force

$RGName = "<Your Existing Resource Group>"

$ServerName = "<Your Azure SQL Database Server>"

$Tenant = "<Add Tenant ID>"

$Subscription = "<Add your Subscription ID>"

Connect-AzAccount -Tenant $Tenant -SubscriptionId $Subscription

$server = Get-AzSqlServer -ResourceGroupName $RGName -ServerName $ServerName

$db = New-AzSqlDatabase -ResourceGroupName $RGName -ServerName $server.ServerName -DatabaseName $DBName -RequestedServiceObjectiveName "S0"

Your Homework Assignment

You now have all the nuts and bolts you need to write a script that migrates every database on an instance, importing them to Azure SQL Database in one loop.
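As a starting point for that homework, here is one way the loop could look. This is a rough sketch rather than a finished migration script: the function name `Copy-InstanceDatabasesToAzureSql` and its parameters are my own illustrative choices, it assumes every user database on the source instance should be migrated under the same name, and it does no error handling or tier selection.

```powershell
# Hypothetical helper: the name and parameters are illustrative, not part of dbatools.
function Copy-InstanceDatabasesToAzureSql {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string] $SourceInstance,
        [Parameter(Mandatory)] [string] $AzureDestInstance,
        [Parameter(Mandatory)] [string] $BackupPath,
        [Parameter(Mandatory)] [pscredential] $AzureCredential
    )

    Import-Module dbatools -ErrorAction Stop

    # Same bacpac options as the single-database example: no command timeout
    $exportOption = New-DbaDacOption -Type Bacpac -Action Export
    $exportOption.CommandTimeout = 0
    $publishOption = New-DbaDacOption -Type Bacpac -Action Publish
    $publishOption.CommandTimeout = 0

    # Assumption: migrate every user database, skipping system databases
    $databases = Get-DbaDatabase -SqlInstance $SourceInstance -ExcludeSystem

    foreach ($database in $databases) {
        # Export the source database to a bacpac file in $BackupPath
        $bacpac = Export-DbaDacPackage -Type Bacpac -DacOption $exportOption -Path $BackupPath -SqlInstance $SourceInstance -Database $database.Name

        # Import the bacpac into Azure SQL Database under the same name
        Publish-DbaDacPackage -Type Bacpac -SqlInstance $AzureDestInstance -Database $database.Name -Path $bacpac.Path -DacOption $publishOption -SqlCredential $AzureCredential
    }
}
```

You could call it with the variables from the first script, for example `Copy-InstanceDatabasesToAzureSql -SourceInstance $SourceInstance -AzureDestInstance $AzureDestInstance -BackupPath $BackupPath -AzureCredential (Get-Credential)`. If you want cheaper tiers, create each target database with New-AzSqlDatabase before the publish step, as shown earlier.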
